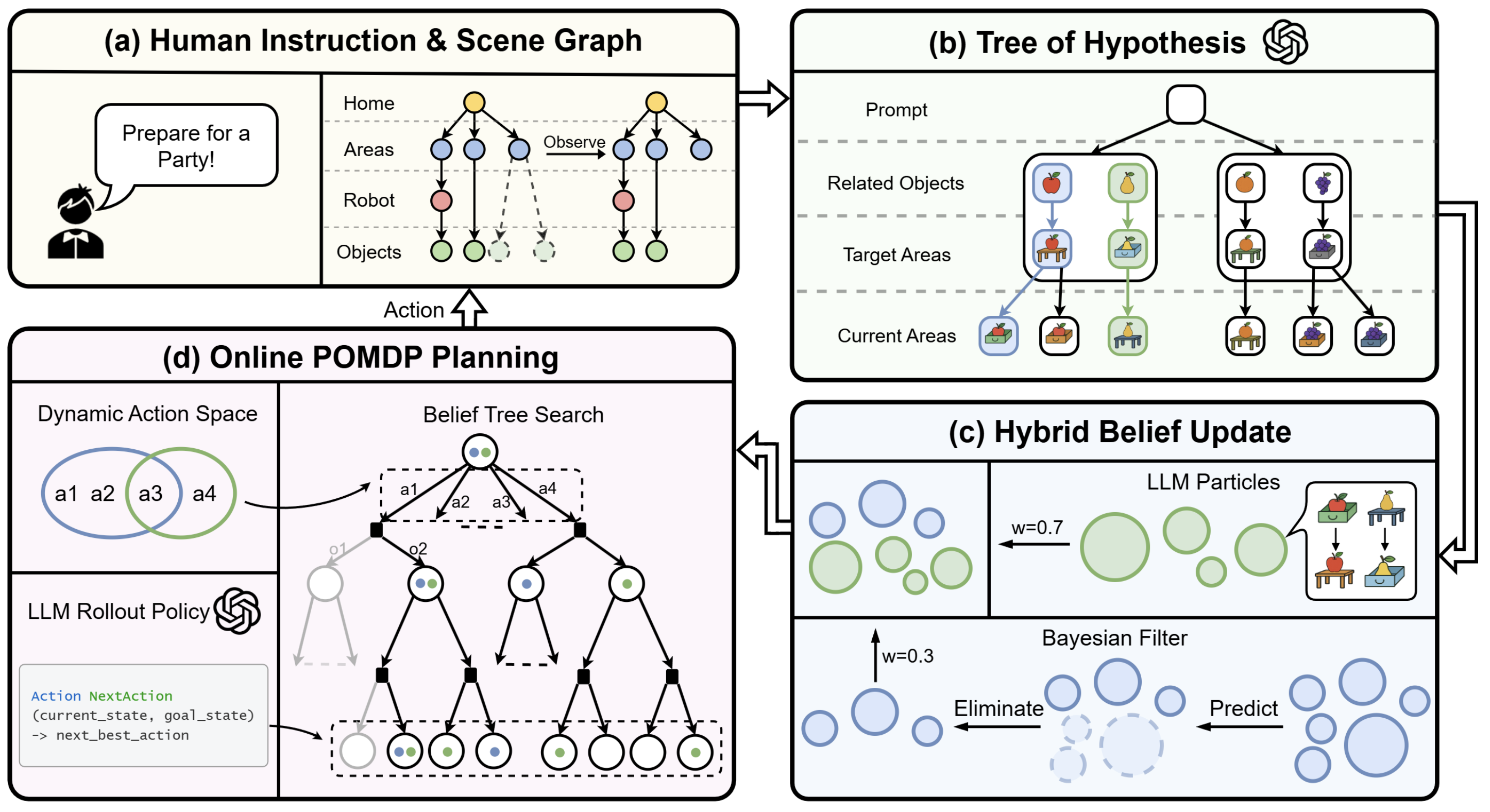

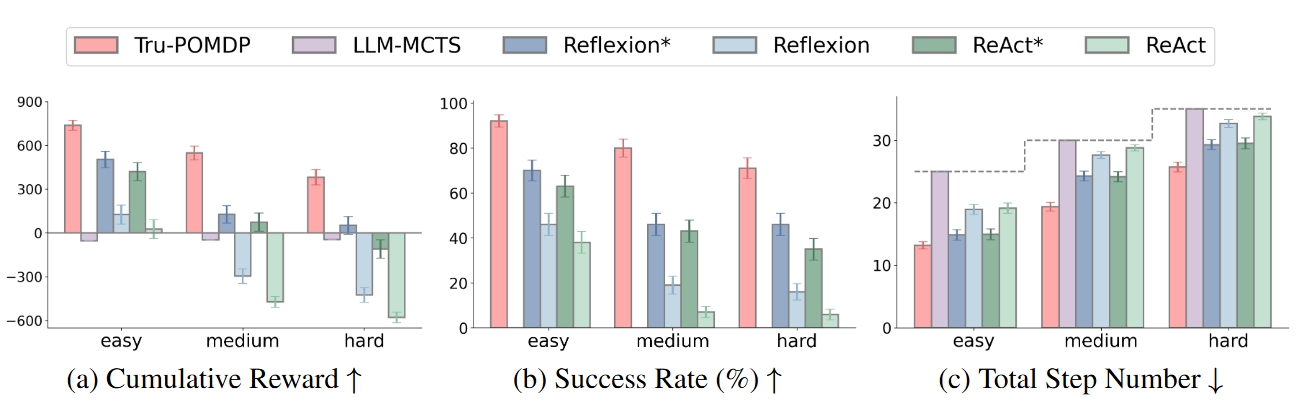

we propose Tru-POMDP, a new algorithm for task planning under uncertainty that tightly integrates commonsense reasoning by LLMs with explicit belief tracking and principled POMDP planning. Our main contributions include:

- The first framework to integrate LLM-based reasoning with principled POMDP planning for household tasks.

- A novel hybrid belief modeling approach that integrates LLM-generated hypotheses with principled Bayesian filtering.

- A POMDP model for open-ended object rearrangement tasks and a practical belief-tree search planner for solving such tasks efficiently under large-scale uncertainty.
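To make the idea of Bayesian filtering over LLM-generated hypotheses concrete, the following is a minimal sketch and not the Tru-POMDP implementation. It assumes a discrete belief over candidate locations of a single object, with prior weights of the kind an LLM might propose, and a deterministic observation model (looking inside a container reveals whether the object is there). The function name `bayes_filter_update`, the location names, and the probabilities are all hypothetical and chosen only for illustration.

```python
def bayes_filter_update(belief, likelihood):
    """One Bayesian filtering step over a discrete set of hypotheses.

    belief:     dict mapping hypothesis -> prior probability
    likelihood: function mapping hypothesis -> P(observation | hypothesis)
    Returns the normalized posterior as a new dict.
    """
    unnormalized = {h: p * likelihood(h) for h, p in belief.items()}
    total = sum(unnormalized.values())
    if total == 0.0:
        # Every hypothesis is ruled out; new hypotheses would be needed.
        raise ValueError("observation is inconsistent with every hypothesis")
    return {h: w / total for h, w in unnormalized.items()}


# Hypothetical prior over where an apple might be (illustrative values only).
belief = {"fridge": 0.5, "kitchen_cabinet": 0.3, "dining_table": 0.2}


def not_seen_in_fridge(hypothesis):
    # Assumed observation model: the robot opened the fridge and saw no apple,
    # so the "fridge" hypothesis has zero likelihood and the others are unaffected.
    return 0.0 if hypothesis == "fridge" else 1.0


posterior = bayes_filter_update(belief, not_seen_in_fridge)
print(posterior)
```

In this sketch the probability mass of the eliminated hypothesis is redistributed proportionally across the remaining ones. The error raised when all hypotheses are ruled out marks the point at which a hybrid approach could, under these assumptions, request fresh hypotheses from the LLM.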